Self-Supervised Geometric Perception

Heng Yang*, Wei Dong*, Luca Carlone, Vladlen Koltun

CVPR 2021 (Oral)

Abstract

We present self-supervised geometric perception (SGP), the first general framework to learn a feature descriptor for correspondence matching without any ground-truth geometric model labels (e.g., camera poses, rigid transformations).

Our first contribution is to formulate geometric perception as an optimization problem that jointly optimizes the feature descriptor and the geometric models given a large corpus of visual measurements (e.g., images, point clouds). Under this optimization formulation, we show that two important streams of research in vision, namely robust model fitting and deep feature learning, correspond to optimizing one block of the unknown variables while fixing the other block.
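The joint problem can be written schematically as follows. The notation is assumed here for illustration and does not come from the text above: $\theta$ denotes the descriptor parameters, $x_i$ the geometric model for the $i$-th pair of measurements $(a_i, b_i)$, $\mathcal{C}_\theta(a_i, b_i)$ the putative correspondences obtained by matching descriptors, $r$ a residual function, and $\rho$ a robust cost.

```latex
\min_{\theta,\; x_1, \dots, x_N} \;
  \sum_{i=1}^{N} \;
  \sum_{(p,q) \in \mathcal{C}_\theta(a_i, b_i)}
  \rho\bigl( r(x_i;\, p, q) \bigr)
```

In this sketch, fixing $\theta$ decouples the sum into $N$ independent robust model fitting problems, one per pair. Fixing the models $x_i$ leaves a problem over $\theta$ alone, which plays the role of feature learning supervised by the geometric models.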

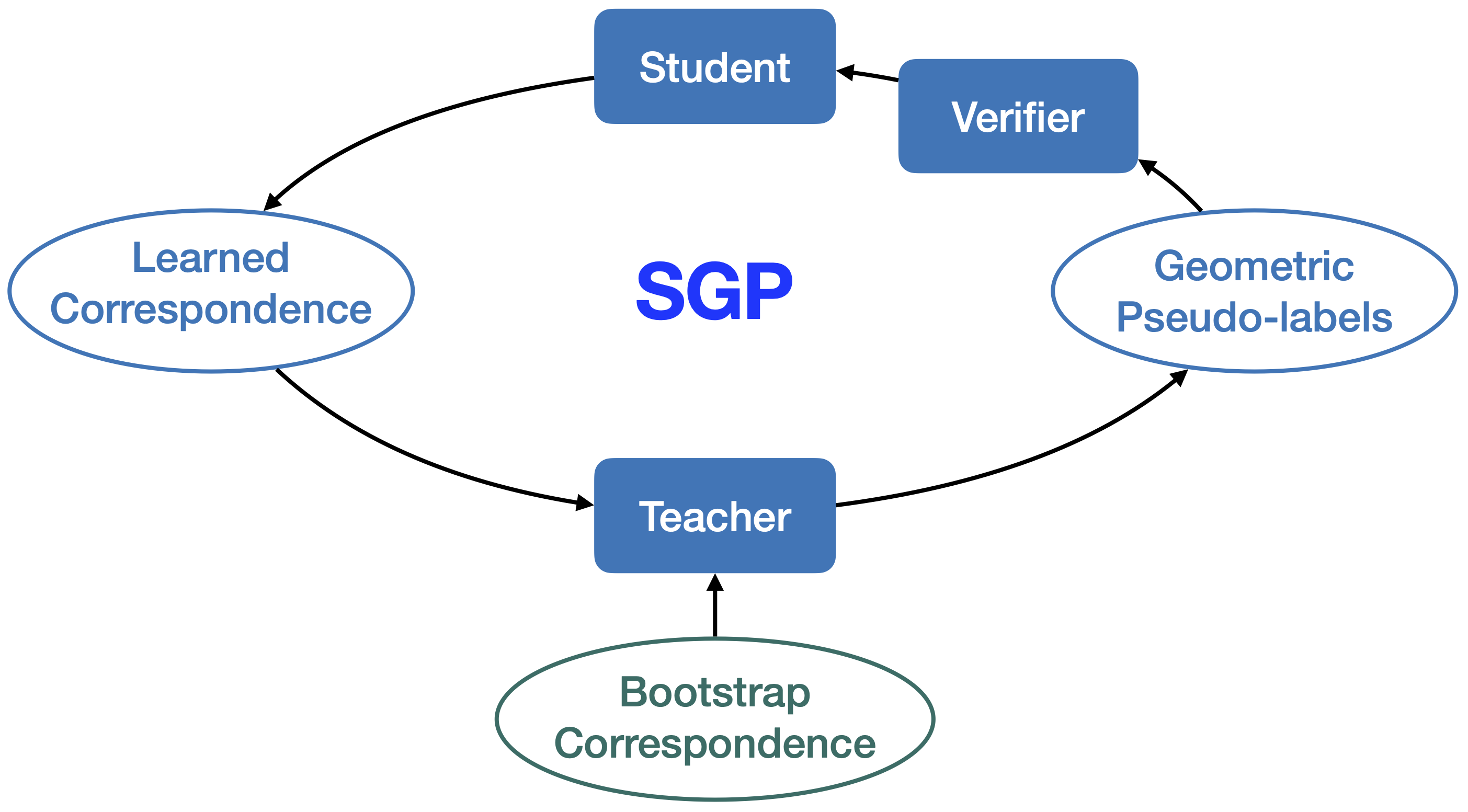

This analysis naturally leads to our second contribution – the SGP algorithm that performs alternating minimization to solve the joint optimization. SGP iteratively executes two meta-algorithms:

- a teacher that performs robust model fitting given learned features to generate geometric pseudo-labels,

- a student that performs deep feature learning under noisy supervision of the pseudo-labels.
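The alternation between the two meta-algorithms can be sketched as a short loop. This is a minimal illustration of the control flow only, not the authors' implementation: the function `sgp_alternation`, its parameters, and the toy `toy_teacher` and `toy_student` stand-ins are all hypothetical names introduced here. In a real setting the teacher would be a robust estimator over descriptor matches, and the student would train a descriptor network.

```python
import statistics
from typing import Callable, List, Sequence, Tuple, TypeVar

Pair = TypeVar("Pair")      # one pair of measurements (e.g., two images)
Model = TypeVar("Model")    # a geometric model (e.g., a relative pose)
Params = TypeVar("Params")  # descriptor parameters


def sgp_alternation(
    pairs: Sequence[Pair],
    init_params: Params,
    teacher: Callable[[Pair, Params], Model],
    student: Callable[[Sequence[Pair], Sequence[Model], Params], Params],
    num_rounds: int = 3,
) -> Tuple[Params, List[Model]]:
    """Alternate teacher and student steps (hypothetical helper)."""
    params = init_params
    labels: List[Model] = []
    for _ in range(num_rounds):
        # Teacher step: descriptor fixed, fit one geometric model per pair.
        labels = [teacher(pair, params) for pair in pairs]
        # Student step: pseudo-labels fixed, update the descriptor.
        params = student(pairs, labels, params)
    return params, labels


def toy_teacher(pair, params):
    # Toy stand-in: the median of pointwise differences is a simple
    # robust estimate of a 1D shift. It ignores `params` entirely.
    a, b = pair
    return statistics.median(bj - aj for aj, bj in zip(a, b))


def toy_student(pairs, labels, params):
    # Toy stand-in: a real student would take gradient steps on a
    # descriptor loss; this one only counts the rounds.
    return params + 1


# Two toy pairs; the last point of each second list is an outlier.
pairs = [
    ([0.0, 1.0, 2.0], [2.0, 3.0, 9.0]),
    ([5.0, 6.0, 7.0], [4.0, 5.0, 0.0]),
]
final_params, pseudo_labels = sgp_alternation(
    pairs, 0, toy_teacher, toy_student, num_rounds=3
)
```

The teacher and student are passed in as callables so that the loop itself stays independent of the particular perception problem, mirroring the description of the two components as meta-algorithms.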

As a third contribution, we apply SGP to two perception problems on large-scale real datasets, namely relative camera pose estimation on MegaDepth and point cloud registration on 3DMatch. We demonstrate that SGP achieves state-of-the-art performance that is on par with or superior to the supervised oracles trained using ground-truth labels.

Citation

@inproceedings{yang2021sgp,
  author = {Yang, Heng and Dong, Wei and Carlone, Luca and Koltun, Vladlen},
  title = {Self-Supervised Geometric Perception},
  booktitle = {CVPR},
  month = {June},
  year = {2021},
  pages = {14350--14361}
}